Local Services

Run various services locally

Introduction

We rely heavily on Elasticsearch, Redis, and Postgres in our applications. While developing, you will connect to instances of these services running in different environments (local, dev, qa, or production).

Redis is currently used for authentication, temporary password reset URLs, and caching data for migration.

Postgres stores all of our workflow data.

Elasticsearch provides our magic-sauce search capabilities.

Config files

Our various servers connect to services at locations defined in config files. At any point you can run against the local, dev, qa, or production services. The config files are typically named after these environments (for example, local.js).

The local.js (or local.ts) config file is a special case. Because it is listed in .gitignore and not shared with other developers, you can use it to point your local development at any combination of services and locations.

To connect to production services from your local dev machine, you will likely need to set up an SSH tunnel. And please, please, please know what you're doing. :)
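A minimal sketch of such a tunnel, assuming access goes through an SSH bastion host and the remote Postgres listens on the default port 5432. The function name, hosts, and local port are hypothetical placeholders, not values from our config files:

```bash
#!/usr/bin/env bash
# Hypothetical helper (not part of titanhouse-api): forward a local port to a
# remote Postgres server through an SSH bastion host.
#   $1  local port to listen on (e.g. 5433, so it won't clash with a local
#       Postgres container published on 5432)
#   $2  remote database host, as reachable from the bastion
#   $3  bastion host, as [user]@[host]
# Assumes the remote Postgres listens on the default port 5432.
open_pg_tunnel() {
  local local_port="$1" db_host="$2" bastion="$3"
  # -N: run no remote command, just hold the forward open until Ctrl-C
  # -L: listen on local_port and forward connections to db_host:5432
  ssh -N -L "${local_port}:${db_host}:5432" "$bastion"
}
```

For example, run open_pg_tunnel 5433 [db-host] [user]@[bastion-host] in one terminal, then point the Postgres host and port in your local.js at localhost:5433 while the tunnel is open.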

Docker

The titanhouse-api repo uses a number of Docker containers to run various services locally for development. A docker-compose.yml file at the top level of the repo defines all the services.

To get up and running:

- Install Docker

- In the top level of the titanhouse-api repo, run docker-compose up

To show a list of your running containers:

docker ps

To open a terminal session in a running container:

docker exec -it [container-id] bash

To exit the container's terminal:

exit

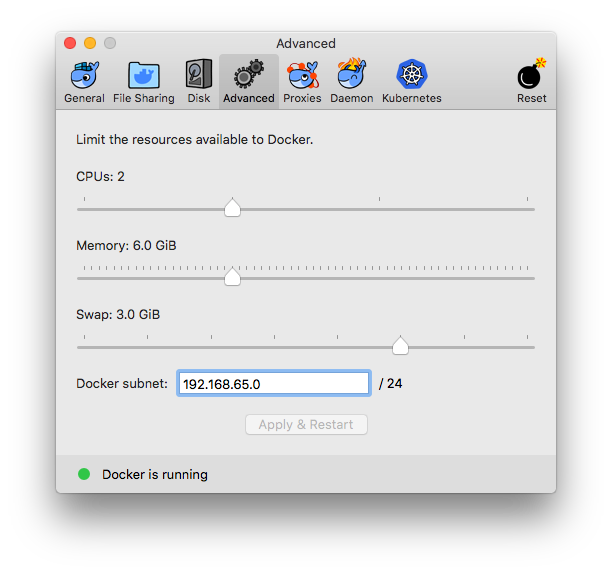

Note: after installing Docker, raise its default memory and CPU limits. If you can spare 50% of your computer's resources for Docker, that doesn't hurt.
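The docker ps and docker exec steps above can be folded into one small shell function, sketched below. The function name is hypothetical; it relies on docker ps --filter "name=...", which matches all or part of a container name. If you would rather go by compose service name, docker-compose exec [service] bash from the top level of the repo does the same job.

```bash
#!/usr/bin/env bash
# Hypothetical helper: open a shell in the first running container whose
# name contains $1 (docker's name filter matches substrings).
#   $1  all or part of the container name, e.g. "redis" (see docker ps)
#   $2  shell to run; defaults to bash (some slim images only ship sh)
dshell() {
  local id
  id=$(docker ps --filter "name=$1" --format '{{.ID}}' | head -n 1)
  if [ -z "$id" ]; then
    echo "no running container matching '$1'" >&2
    return 1
  fi
  docker exec -it "$id" "${2:-bash}"
}
```

For example, dshell redis opens a shell in the Redis container, and exit leaves it as before.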

Redis

If you open a terminal session in the Redis container, redis-cli is available for running Redis commands directly. Usage: https://redis.io/topics/rediscli
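For one-off commands you can skip the interactive session. A sketch, assuming the compose service is named redis (check docker-compose.yml) and that you run it from the top level of the titanhouse-api repo; the function name is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: run one redis-cli command inside the Redis container.
# Assumes the docker-compose service is called "redis" and that the current
# directory holds docker-compose.yml.
# -T disables the pseudo-TTY so the output can be piped or captured cleanly.
redis_cmd() {
  docker-compose exec -T redis redis-cli "$@"
}
```

For example, redis_cmd PING should answer PONG when the server is up, redis_cmd TTL [key] shows how many seconds a key has left before it expires, and redis_cmd --scan --pattern '[prefix]*' lists matching keys using SCAN instead of the blocking KEYS command.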

Postgres

Connection strings and the associated credentials are located in various config files of the titanhouse-api repo.

Even though Postgres is running in a docker container, to use psql and pg_dump from your operating system you will need to install Postgres. On mac:

brew install postgresql
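Once the client tools are installed, connecting to the container from your host looks like the sketch below. Host, user, and database match the load command further down this page; the port assumes the container publishes Postgres on the default 5432, and the function name is hypothetical:

```bash
#!/usr/bin/env bash
# Hypothetical helper: open psql against the local Postgres container.
# localhost/postgres/titanhouse match the load command on this page;
# port 5432 is Postgres's default and assumes docker-compose publishes it.
# Any extra arguments are passed straight through to psql.
local_psql() {
  psql -h localhost -p 5432 -U postgres -d titanhouse "$@"
}
```

For example, local_psql -c 'SELECT version();' runs a single query and exits. The same connection written as a URI, which psql also accepts, is postgresql://postgres@localhost:5432/titanhouse.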

One recommended database client is Valentina Studio.

Load data locally

You will be prompted for the appropriate password for the following commands (see config files).

To dump data from a remote database (the host below is the dev/UAT RDS instance; substitute the host for the environment you need):

pg_dump -h instance-dev-uat.cxkzwqegz2la.us-east-1.rds.amazonaws.com -U th_master -d titanhouse --clean | gzip > [some-file-name].gz
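In a plain pipeline the exit status is gzip's, so if pg_dump fails partway the shell still reports success and a truncated .gz file is left behind. A sketch of the same command with a failure check; the function name is hypothetical, while the user, database, and --clean flag are copied from the command above:

```bash
#!/usr/bin/env bash
# Hypothetical helper: the dump command above, with a failure check.
#   $1  database host (e.g. the RDS host above)
#   $2  output file, e.g. titanhouse-20240101.gz
# The body runs in a subshell ( ... ) so that pipefail doesn't leak into
# your interactive shell.
dump_remote_db() (
  set -o pipefail
  host="$1"
  out="$2"
  # With pipefail, the pipeline fails if pg_dump fails, not just if gzip does.
  if ! pg_dump -h "$host" -U th_master -d titanhouse --clean | gzip > "$out"; then
    echo "pg_dump failed; removing partial dump $out" >&2
    rm -f "$out"
    exit 1  # exits only the subshell, i.e. this function
  fi
)
```

For example: dump_remote_db instance-dev-uat.cxkzwqegz2la.us-east-1.rds.amazonaws.com titanhouse-$(date +%Y%m%d).gz (pg_dump still prompts for the password).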

To load data to local database:

gunzip -c [some-file-name].gz | psql titanhouse -U postgres -h localhost

The load command will print some errors, but the data will all get in there.
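The load step as a reusable function, sketched under the same assumptions (the function name is hypothetical; host, user, and database are taken from the command above, with the options placed before the database):

```bash
#!/usr/bin/env bash
# Hypothetical helper: load a gzipped dump into the local database.
#   $1  dump file produced by the pg_dump command above
# gunzip -c decompresses to stdout and leaves the .gz file in place.
load_local_db() {
  gunzip -c "$1" | psql -h localhost -U postgres -d titanhouse
}
```

If the expected errors get in the way, pg_dump has --if-exists (used together with --clean) and --no-owner options that are worth trying on the dump side; whether they account for all of the errors depends on what those errors actually are.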